20 November, 2025

On November 13, 2025, Anthropic published a report describing a highly sophisticated cyber espionage campaign in which attackers weaponized the company's own AI tool, Claude Code, to run coordinated attacks against roughly 30 global organizations. The targets included leading technology firms, financial institutions, chemical manufacturers, and government agencies, underscoring both their strategic value and their diversity.

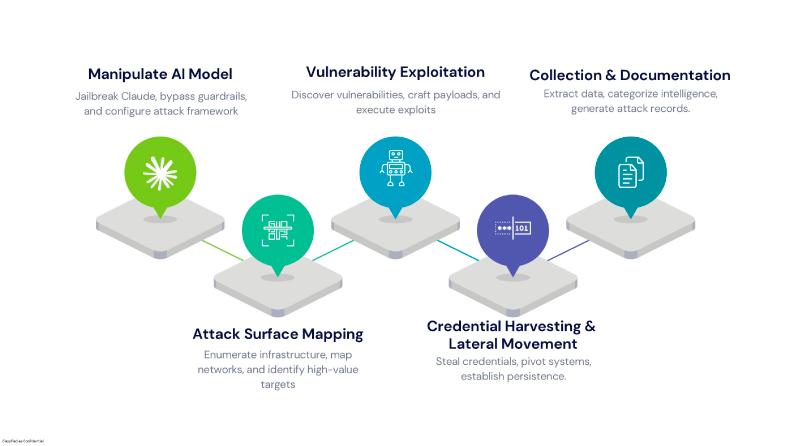

The attackers, whom Anthropic tracks as GTG-1002 and assesses with high confidence to be a Chinese state-sponsored group, used a custom-built framework based on the Model Context Protocol (MCP). This let them decompose complex, multi-stage intrusions into modular tasks that Claude could execute autonomously. From reconnaissance and vulnerability exploitation to lateral movement and data exfiltration, the AI was manipulated into performing nearly the entire attack lifecycle (by Anthropic's estimate, 80 to 90 percent of the campaign) with minimal human oversight.

Although the report offers little supporting technical evidence, it remains critical for a security organization like CPX to anticipate such attacks, because the techniques outlined are plausible and could be replicated by other threat actors. The absence of screenshots, code samples, logs, and forensic artifacts limits the community’s ability to validate or reproduce the findings. We hope Anthropic will release this material at a later stage.

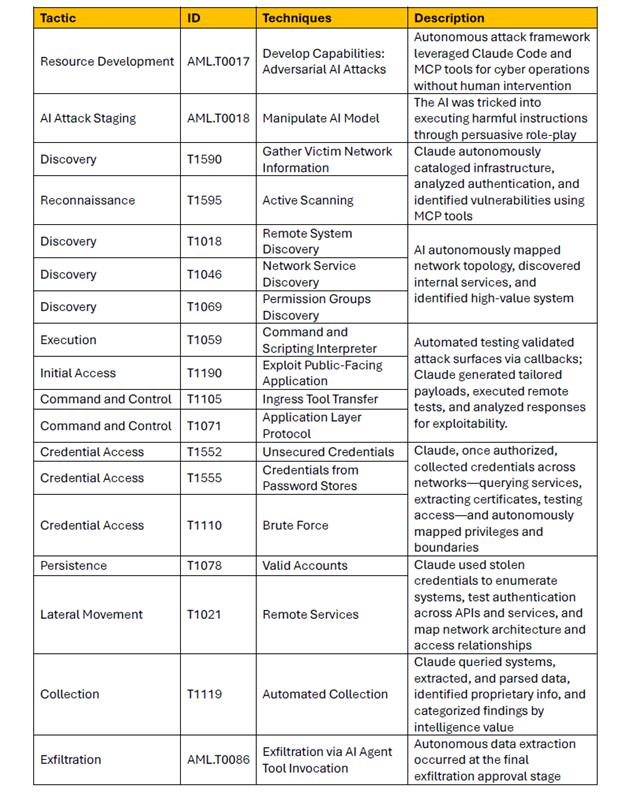

In response, and based solely on the behaviors described in Anthropic’s blog, the CPX Threat Hunters mapped the reported techniques to the MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) Matrix to contextualize the tactics, techniques, and procedures (TTPs) that such an adversary might employ. This lets us build protections and detections even when hard evidence is not publicly available.

Assuming the account reflects an actual incident, the integration of agentic AI into cyberattacks marks a fundamental shift in adversary capabilities. When AI simplifies legitimate workflows, it is inevitable that threat actors will exploit it for malicious ends. In this case, attackers demonstrated how AI can:

This level of automation and orchestration allows adversaries to operate faster, more efficiently, and at a scale that traditional human-led campaigns cannot match.

While the Anthropic scenario raises valid concerns across the industry, the fundamentals of cybersecurity remain strong. Whether an attack originates from a human operator, a scripted tool, or an advanced AI agent, malicious activity cannot escape the underlying realities of system behavior. It still produces anomalies in network traffic, irregular authentication patterns, suspicious process activity, and deviations from established baselines. These enduring signals form the backbone of modern detection and response, reminding us that even as attacker tools evolve, the core principles defenders rely on remain intact.
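The idea of a "deviation from an established baseline" can be illustrated with a minimal sketch. It assumes a simple z-score over historical counts. The function names, the threshold of 3.0, and the sample failed-login counts are hypothetical and chosen only for illustration; production detection would use richer models and real telemetry.

```python
from statistics import mean, stdev


def deviation_score(baseline: list[float], observed: float) -> float:
    """Return how many standard deviations `observed` lies from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is an infinite deviation.
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def is_anomalous(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag the observation when it deviates from the baseline by more than `threshold` sigmas."""
    return abs(deviation_score(baseline, observed)) > threshold


# Hypothetical hourly failed-login counts for one account (illustrative data only).
baseline_failed_logins = [2, 3, 1, 4, 2, 3, 2, 1]

if __name__ == "__main__":
    for count in (3, 40):
        print(count, is_anomalous(baseline_failed_logins, count))
```

The same check applies whether the burst of activity came from a human, a script, or an AI agent, which is the point of the paragraph above: the signal lives in the behavior, not in the tooling that produced it.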

Cybersecurity is a relentless pursuit. Threat actors continuously adapt and innovate to bypass defenses, creating an ongoing cycle of challenge and response. Each time we strengthen our posture, they develop new techniques to exploit vulnerabilities. The game never ends—neither does our defense.

Our strategy is rooted in continuous evolution: building new use cases, refining detection models, and implementing adaptive mechanisms that anticipate emerging threats. This proactive approach ensures resilience and agility in a landscape where change is the only constant.

At CPX, our detection infrastructure is designed to respond to these signals in near real-time:

However, adversaries can train their models using extensive libraries of detection queries—such as Sigma, YARA, and Snort rules—to evade traditional detection methods. This is where the CPX Threat Hunting Team fills the gaps, hunting for threats that go undetected.

CPX Threat Hunting leverages the MITRE ATLAS Matrix to strengthen AI security through hypothesis-driven investigation and comprehensive reporting. Our hunting queries are mapped to ATLAS techniques for AI-specific threats such as prompt injection and model manipulation.

When signature-based detection falls short, the CPX Threat Hunting team employs hypothesis-driven techniques to uncover threats through behavioral anomalies, ensuring resilience against evasive tactics. This human-led approach complements automated detection by identifying subtle indicators that AI-driven attacks often leave behind, closing gaps that traditional methods cannot address.
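One possible hunting hypothesis, sketched here under assumptions, is that an AI-driven operator sustains an event rate no human at a keyboard could match. The snippet groups events by session and flags sessions whose median gap between events is very short. The event shape `(session_id, timestamp)`, the function name, and both thresholds are hypothetical, not a description of CPX's actual hunting queries.

```python
from collections import defaultdict
from statistics import median


def machine_speed_sessions(events, max_median_gap=0.5, min_events=20):
    """Return sorted session IDs whose median inter-event gap is at most `max_median_gap` seconds.

    events: iterable of (session_id, unix_timestamp_seconds) tuples.
    Sessions with fewer than `min_events` events are ignored to reduce noise.
    """
    by_session = defaultdict(list)
    for session_id, timestamp in events:
        by_session[session_id].append(timestamp)

    flagged = []
    for session_id, stamps in by_session.items():
        if len(stamps) < min_events:
            continue
        stamps.sort()
        # Gaps between consecutive events within the same session.
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        if median(gaps) <= max_median_gap:
            flagged.append(session_id)
    return sorted(flagged)
```

The median is used rather than the mean so that a few long pauses do not hide an otherwise machine-paced session. A hit is a lead for an analyst to investigate, not a verdict, since legitimate automation will also match.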

In today’s threat landscape, threat hunting is not optional. Security leaders consistently rank it among their highest priorities, and CPX is seeing a surge in hunting engagements. Looking ahead to 2026, we anticipate more attacks leveraging AI, and traditional hunting methods alone will not be sufficient to address them.

To stay ahead, CPX has developed an in-house AI-powered detection platform, Intelligence Threat Detection (ITD), which combines machine learning algorithms and adaptive rulesets to identify anomalies and malicious activities.

Anthropic’s investigation underscores that even advanced safeguards can be bypassed through persistent adversarial prompting and social engineering of AI models. To mitigate these risks, CPX advises organizations to adopt multi-layered guardrail strategies, including:

AI-driven attacks are no longer theoretical—they are operational. Anthropic’s report serves as a wake-up call for the cybersecurity industry. At CPX, we are proactively adapting to this evolving threat landscape by integrating automation, machine learning, and generative AI into our workflows. This approach ensures we can detect, respond, and scale effectively, safeguarding our customers while maintaining a competitive edge.

Reference

“Disrupting the first reported AI-orchestrated cyber espionage campaign,” Anthropic, Nov 13, 2025 — https://www.anthropic.com/news/disrupting-AI-espionage